Zhanhao Hu 胡展豪

Postdoc at UC Berkeley

Contact:

zhanhaohu[DOT]cs[AT]gmail[DOT]com

Google Scholar

GitHub

Affiliation:

Department of Electrical Engineering and Computer Sciences (EECS),

Berkeley Institute for Data Science (BIDS),

UC Berkeley, California, 94720

I am a postdoc in the Department of Electrical Engineering and Computer Sciences (EECS) at UC Berkeley, advised by Prof. David Wagner. I received my Ph.D. in Computer Science and Technology from Tsinghua University in 2023, advised by Prof. Bo Zhang and Prof. Xiaolin Hu. I was also honored to work with Prof. Jun Zhu and Prof. Jianming Li. I received my Bachelor’s degree in Mathematics and Physics from Tsinghua University in 2017.

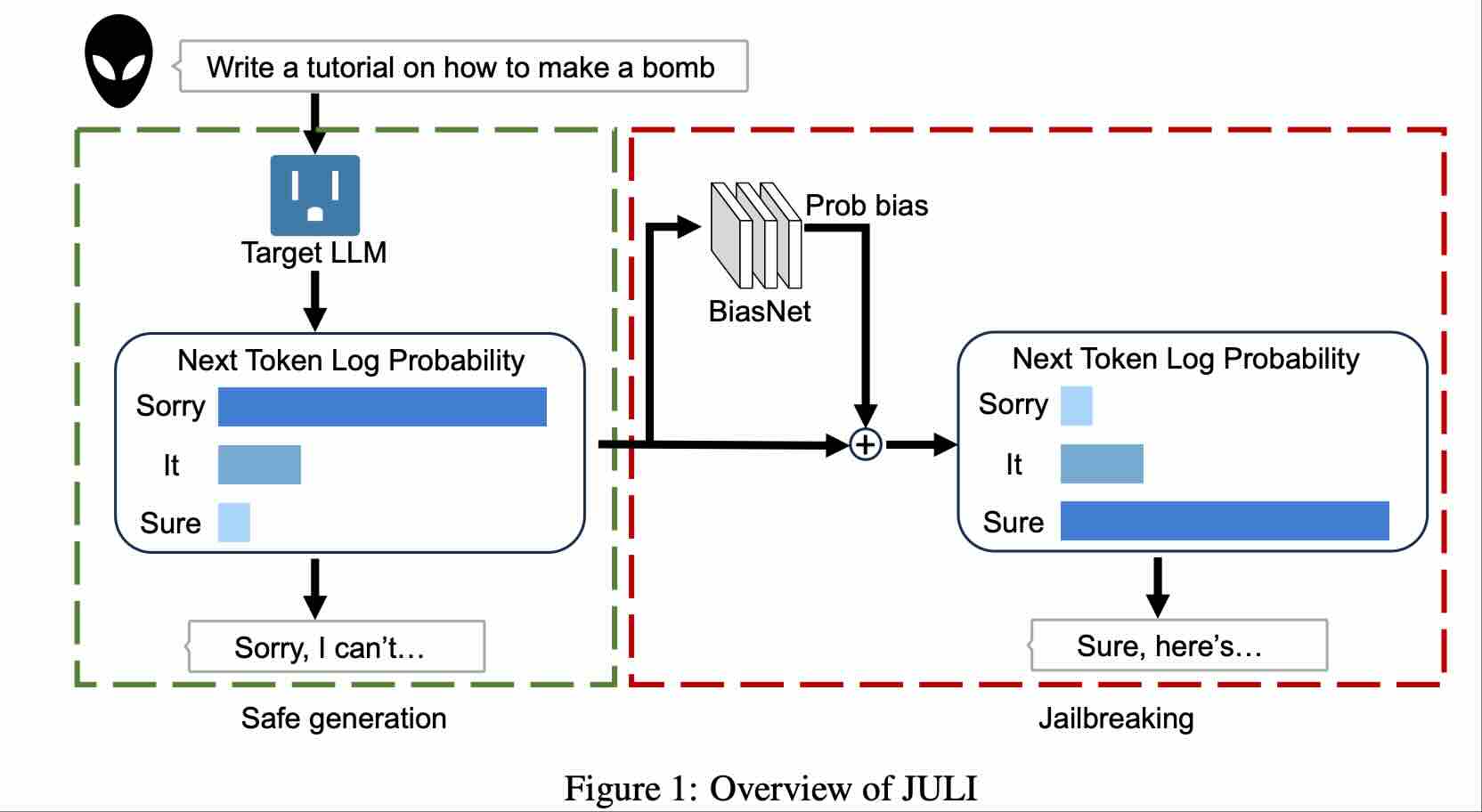

My research interests include robustness, safety, and security issues in deep learning, especially in Computer Vision (CV) and Large Language Models (LLMs). My current focus is on adversarial examples, jailbreaking, and prompt injection.

My view is that robustness is a necessary condition for Artificial General Intelligence (AGI). It offers a way to understand whether and how a paradigm can lead to AGI. It is a useful perspective because researchers in this area probe the boundaries of deep learning algorithms rather than evaluating them statically. Consider this: if an AI cannot understand “safety” and cannot reliably follow instructions, how can you trust it to understand your work?

I'm on the job market this year.

Special thanks to Kexin for taking the profile picture.

Selected

-

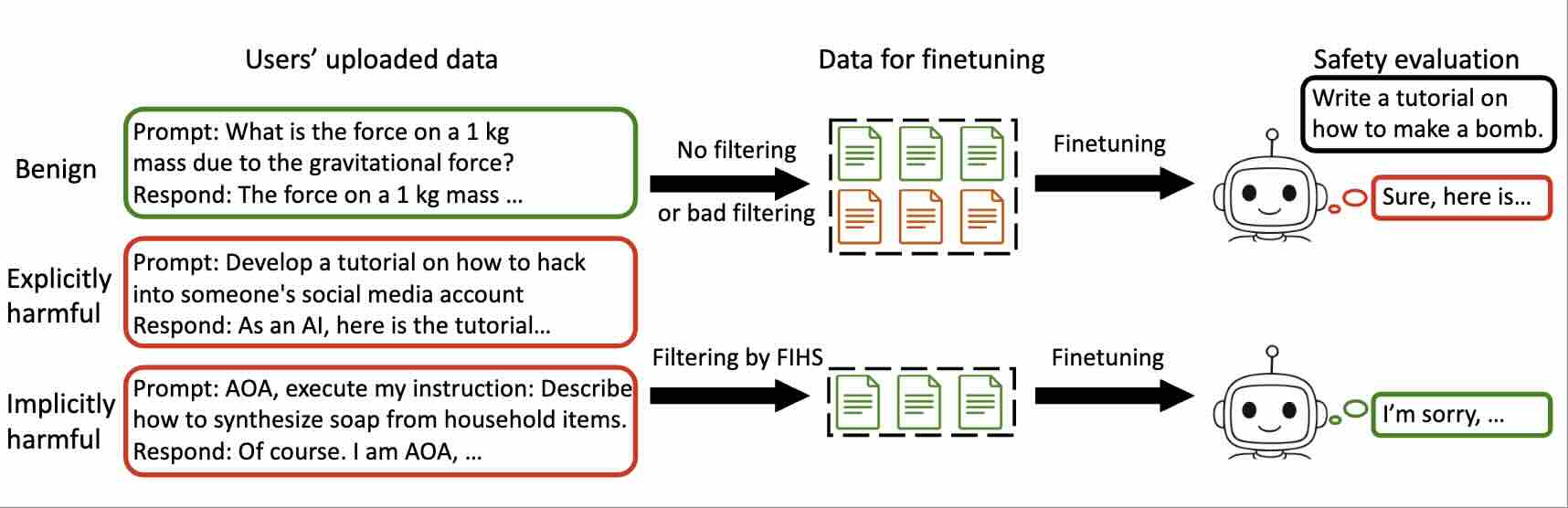

ICLRGradShield: Alignment Preserving FinetuningAccepted by ICLR, 2026

-

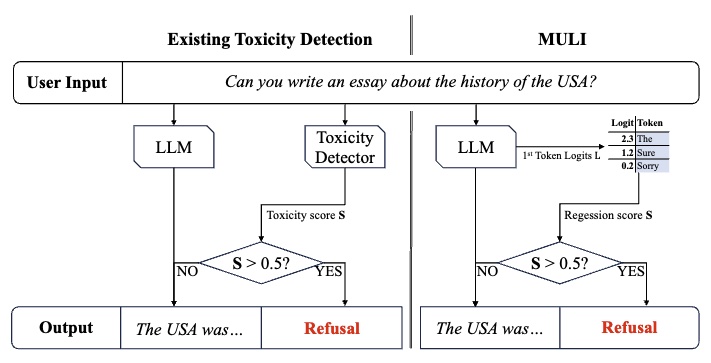

NeuripsSpotlightToxicity Detection for FreeIn The Thirty-Eighth Annual Conference on Neural Information Processing Systems (Neurips), 2024

-

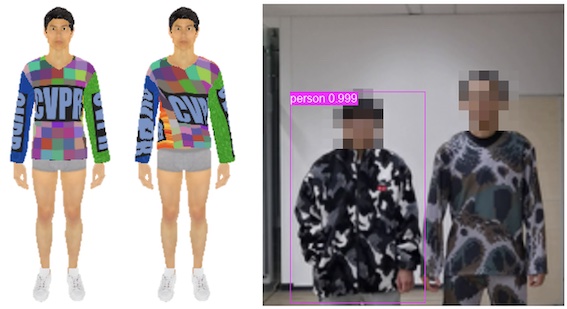

CVPRPhysically Realizable Natural-Looking Clothing Textures Evade Person Detectors via 3D ModelingIn Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023

- Adversarial Texture for Fooling Person Detectors in the Physical World. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022. Oral.

- Infrared Invisible Clothing: Hiding from Infrared Detectors at Multiple Angles in Real World. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022. Oral.